Craft Code is up

The adventure begins

As I mentioned here recently, I’ve been a bit slow in posting (OK, a lot slow) because I’ve been deep in the weeds trying to build as close to the perfect site as I can manage.

That site is Craft Code.

Sustainability

By perfect I mean, among other criteria, a site that meets all of the Web Sustainability Guidelines (WSG: currently still in draft, and I’m an early adopter). If that’s a bit too complex, check out the Web Sustainability Manifesto. Why not sign it while you’re there? Get in early.

There are various tools for checking some of the requirements, especially carbon counts. Typically, most Craft Code pages are around 0.01-0.02 grams carbon for the first load, and less than 0.01 gram on subsequent page loads. Nice!

One such tester is the Website Carbon Calculator. According to this, the typical page on Craft-Code.dev is cleaner than 99% of web pages tested. We take a hit for not using “green hosting”, but that’s not quite so simple. The Craft Code site is served from Vercel, which uses AWS and, I think, Azure.

But both Amazon and Microsoft claim to be 90% green. Bullshit? Who knows? But there’s more to it. Vercel means we serve from the edge, which saves energy. And we use serverless functions, which are far more efficient than keeping a hosted server running 24/7. Craft Code is a static JAMStack site, so that also saves energy.

Is it a wash? I discussed this with some sustainability experts (Craft Code is featured on the lowwwcarbon showcase). They admitted that it’s arguable, which is how I bypassed the requirement to use green hosting to be listed.

Beacon is another site measuring carbon. It measures both first visit and return visits and even gives a grade. All the pages on Craft-Code.dev get an A+. We’re doing something right. We provide a link to proof at the bottom of every site page, which also raises awareness and is a bit of a challenge to other sites.

Accessibility (a11y)

Of course, if one wants to fulfill the WSG one must first fulfill the Web Content Accessibility Guidelines (WCAG) 2.2. My goal is AAA – the highest level. Much of this can be tested with “overlays” such as axe DevTools. I use a pro account and my tests show zero errors or issues for AAA compliance. Nice!

It’s not enough to use only the overlay, so, of course, I am steadily working (and reworking) my way through the entire list in the WCAG Quick Reference to make sure I’ve done it all.

But the big items are there:

At least 7:1 color contrast (AAA).

The smallest font size is 16px. The font sizes increase as the screen size does. I use the Utopia Fluid Type Scale Generator.

The pages are written at a lower secondary school level, but typically even lower. Think 4th grade. This is for people with reading or cognitive disabilities and just makes it simpler for everyone. I use the Hemingway Editor to polish the content and get word count, reading time, and grade level. I’d love to automate this boring task.

But that’s not enough. The site uses a lot of technical terms. So I added a glossary and linked the terms on each page to their glossary entry (using rel="glossary" and a dotted underline). And many glossary entries link further to Mozilla Developer Network, primary sources, or Wikipedia for those who want more depth.

I created (with some help from a real designer) a visual language for the site and implemented it with SVGs (hand-coded). In addition to visual interest and mnemonic/cognitive benefits, the SVGs make the site very fast indeed.

The site has a Feedback form to permit users to report bugs and issues, make suggestions, complain, or whatever else trips their triggers.

There is a FAQ page and a form that allows users to ask questions. This kind of interaction is, I believe, essential to honest accessibility.

The pages are keyboard navigable. The forms provide clear help. There is a print stylesheet that injects the URL of page links next to the label, and provides a QR code to allow you to go straight to the web page from the printed page. Try it! (The QR code is visible even on the screen page so you can jump to another device.)
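The 7:1 contrast target above is easy to check in code. Here is a minimal sketch of the WCAG relative luminance and contrast ratio formulas. The function names are my own for illustration (not taken from the Craft Code source), and it only handles six-digit hex colors.

```typescript
// WCAG relative luminance and contrast ratio for six-digit hex colors.
// All names here are hypothetical, chosen for this sketch.
type RGB = [number, number, number]

// Parse "#1a2b3c" (or "1a2b3c") into 0-255 channel values.
function parseHex(hex: string): RGB {
  const match = /^#?([0-9a-f]{6})$/i.exec(hex.trim())
  const digits = match ? match[1] : undefined
  if (!digits) {
    throw new Error(`Expected a six-digit hex color, got "${hex}"`)
  }
  const value = parseInt(digits, 16)
  return [(value >> 16) & 255, (value >> 8) & 255, value & 255]
}

// Convert an sRGB channel (0-255) to its linear-light value (0-1).
function toLinear(channel: number): number {
  const c = channel / 255
  return c <= 0.04045 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4)
}

function relativeLuminance(rgb: RGB): number {
  const [r, g, b] = rgb
  return 0.2126 * toLinear(r) + 0.7152 * toLinear(g) + 0.0722 * toLinear(b)
}

// Ratio of the lighter color to the darker one, from 1 (same) to 21 (black on white).
function contrastRatio(foreground: string, background: string): number {
  const a = relativeLuminance(parseHex(foreground))
  const b = relativeLuminance(parseHex(background))
  const lighter = Math.max(a, b)
  const darker = Math.min(a, b)
  return (lighter + 0.05) / (darker + 0.05)
}

// AAA for normal-size text requires at least 7:1.
function meetsAAA(foreground: string, background: string): boolean {
  return contrastRatio(foreground, background) >= 7
}
```

Calling meetsAAA("#000000", "#ffffff") returns true, while a mid gray such as "#767676" on white passes AA but fails AAA.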

And much, much more. Nearly thirty years of experience and almost a year of detailed thinking, investigation, and trial and error have gone into this site. But the next one (ScratchCode) will take one tenth that time.

Performance

Performance is a key part of sustainability, but also accessibility and UX. I was just reading an article on the psychology of web page performance. TL;DR: it is important!

I do lots of different checks to ensure that the Craft-Code.dev pages are as fast as possible. One of the earliest is to check Lighthouse in private mode. Here is a typical result (for mobile):

I am currently running through SEO tests. Interestingly (and frustratingly) different testers give different advice. How to know which is correct? Are any of them? And they ain’t cheap, either. The best I’ve found so far, which appears to be SEO Tester Online, is at least 26 euros a month. And that’s limited to one site.

You’re killing me, man. I am unemployed (in pecuniary terms).

These tools seem to be geared to people who want to make a lot of money from their websites. That’s not my goal at all, but I do want people to find the site. Tough call. That said, SEO is the one area where I’m happy with “good enough”. Will update readers later when I know more.

But even with these more thorough tools, I’m very, very close to 100% success. Stay tuned.

And some of the advice? My headings are too short? Give me a break. I’m not going to keyword-stuff my headlines (to the detriment of readers) just to indulge the algorithm gods.

And there is so much more, such as full use of the <picture> element wherever I have raster images. This is one image:

<picture>
  <source
    media="(min-width: 434px)"
    srcset="/images/optimized/768/rr-temptation.avif 1x,
            /images/optimized/1536/rr-temptation.avif 2x,
            /images/optimized/2304/rr-temptation.avif 3x"
    type="image/avif"
  >
  <source
    media="(min-width: 434px)"
    srcset="/images/optimized/768/rr-temptation.webp 1x,
            /images/optimized/1536/rr-temptation.webp 2x,
            /images/optimized/2304/rr-temptation.webp 3x"
    type="image/webp"
  >
  <source
    media="(min-width: 434px)"
    srcset="/images/optimized/768/rr-temptation.png 1x,
            /images/optimized/1536/rr-temptation.png 2x,
            /images/optimized/2304/rr-temptation.png 3x"
    type="image/png"
  >
  <source
    media="(min-width: 434px)"
    srcset="/images/optimized/768/rr-temptation.jpeg 1x,
            /images/optimized/1536/rr-temptation.jpeg 2x,
            /images/optimized/2304/rr-temptation.jpeg 3x"
    type="image/jpeg"
  >
  <source
    media="(max-width: 433px)"
    srcset="/images/optimized/384/rr-temptation.avif 1x,
            /images/optimized/768/rr-temptation.avif 2x,
            /images/optimized/1152/rr-temptation.avif 3x"
    type="image/avif"
  >
  <source
    media="(max-width: 433px)"
    srcset="/images/optimized/384/rr-temptation.webp 1x,
            /images/optimized/768/rr-temptation.webp 2x,
            /images/optimized/1152/rr-temptation.webp 3x"
    type="image/webp"
  >
  <source
    media="(max-width: 433px)"
    srcset="/images/optimized/384/rr-temptation.png 1x,
            /images/optimized/768/rr-temptation.png 2x,
            /images/optimized/1152/rr-temptation.png 3x"
    type="image/png"
  >
  <source
    media="(max-width: 433px)"
    srcset="/images/optimized/384/rr-temptation.jpeg 1x,
            /images/optimized/768/rr-temptation.jpeg 2x,
            /images/optimized/1152/rr-temptation.jpeg 3x"
    type="image/jpeg"
  >
  <img
    alt="You canʼt beat Russian Riverʼs Temptation ale"
    class="optimized-img"
    height="1603"
    src="/images/optimized/768/rr-temptation.jpeg"
    width="768"
  >
</picture>

Yum. Russian River. One of the few things I miss about ‘merika.

That’s a lot of effort to ensure that the most performant image is loaded. (Note: there is some controversy over whether the extra cost of processing for AVIF is compensated for by a smaller size insofar as sustainability is concerned. Am investigating.)
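Nobody wants to type all of that by hand for every image. As a rough sketch, the repeated source elements above could be generated from a few parameters. The path pattern and the 1x/2x/3x densities are read off the markup shown. The function and type names are hypothetical and this is not necessarily how the Craft Code build does it.

```typescript
// Generate <source> markup for one image from breakpoints and formats.
// Path layout (/images/optimized/{width}/{name}.{format}) follows the example
// markup; all identifiers are made up for this sketch.
type ImageFormat = "avif" | "webp" | "png" | "jpeg"

interface Breakpoint {
  media: string // media query for this set of sources
  baseWidth: number // pixel width of the 1x image
}

// One srcset value with 1x, 2x, and 3x density descriptors.
function buildSrcset(name: string, format: ImageFormat, baseWidth: number): string {
  const densities = [1, 2, 3]
  const candidates = densities.map(
    (d) => `/images/optimized/${baseWidth * d}/${name}.${format} ${d}x`,
  )
  return candidates.join(", ")
}

// One <source> per breakpoint and format, most preferred format first.
function buildSources(
  name: string,
  breakpoints: Breakpoint[],
  formats: ImageFormat[],
): string[] {
  const sources: string[] = []
  for (const { media, baseWidth } of breakpoints) {
    for (const format of formats) {
      const srcset = buildSrcset(name, format, baseWidth)
      sources.push(
        `<source media="${media}" srcset="${srcset}" type="image/${format}">`,
      )
    }
  }
  return sources
}

const temptationSources = buildSources(
  "rr-temptation",
  [
    { media: "(min-width: 434px)", baseWidth: 768 },
    { media: "(max-width: 433px)", baseWidth: 384 },
  ],
  ["avif", "webp", "png", "jpeg"],
)
```

The order of the formats matters: the browser picks the first source whose media query and type it accepts, so the smallest modern format goes first and JPEG last.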

Standards compliance

I’m doing my best to write smart, standards-compliant, semantic HTML and standards-compliant CSS. The best validators for this are probably the W3C validators. It’s a bit tricky, though.

For HTML, I am 100% standards compliant, I think (I need to retest regularly because I break this a lot). But I do get a complaint about a / in an empty element. I also get a ping on an adapt-symbol attribute, but that’s just me experimenting. The WAI-Adapt guidelines are still germinating.

The irony of getting this slash warning from the folks who brought you XHTML! Granted, I’m serving the pages as text/html, but that’s a bit picky, no? And I can’t help it. I’m using Astro which means JSX, and that’s an artifact of JSX. Nothing’s perfect, I guess.

The CSS validator is a bit more troubling. I’m using stylelint on every push – I use Biome for JS/TS – so I know the CSS is good. But I also am using some advanced CSS (with fallbacks), such as :has() (MDN). The W3C validator doesn’t recognize it, so I get a slew of errors. But as long as I’m only getting those errors, then I think I’m 100% compliant.

Doesn’t mean my CSS is worth a shit (see below), but it’s a start.

The Web Developer extension is really helpful for this kind of thing. And it has a (very slow) link checker (W3C again). Maybe run it overnight?

The code

The Craft-Code.dev site is pure semantic HTML, CSS, and JavaScript. There are zero front-end dependencies. I repeat:

There are zero front-end dependencies.

There are zero front-end dependencies.

I do use a polyfill (but almost not at all) for JS Temporal because … did I mention that I’m a very early adopter? Hopefully, that will go away soon. JS dates suck.

But there are no serious front-end libraries or frameworks. No React. No Redux. No Ramda. No RxJS. Also, nothing like MUI – I built my own Astro component library in the process of building the Craft Code site (I’ll post a link when it’s out of alpha).

Structure and semantics

I pay very close attention to the page structure and the use of proper HTML Elements. I’ve avoided Web Components so far because using Astro gives me a component architecture without having to give up on vanilla code, and it’s easier than dealing with shadow shit.

Accessibility means using correct landmarks: <header>, <nav>, <main>, and <footer> mainly, but also <aside> and <section>. Each of these should have a heading (even if it is not visible on the page), and those headings – <h1> to <h6> – should be properly nested.
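Checking the nesting is simple enough to script. Below is a minimal sketch that takes the heading levels in document order and reports any skipped levels. It assumes two rules: the first heading is an h1, and a heading may go at most one level deeper than the one before it. The names are hypothetical.

```typescript
// Report headings that skip a level (e.g. an h2 followed directly by an h4).
// Assumed rules: start at h1, and never descend more than one level at a time.
interface HeadingProblem {
  index: number // position in document order, starting at 0
  level: number // the offending heading level (1-6)
  message: string
}

function checkHeadingLevels(levels: number[]): HeadingProblem[] {
  const problems: HeadingProblem[] = []
  let previous = 0
  levels.forEach((level, index) => {
    if (index === 0 && level !== 1) {
      problems.push({ index, level, message: `first heading is h${level}, expected h1` })
    } else if (index > 0 && level > previous + 1) {
      problems.push({ index, level, message: `h${previous} is followed by h${level}` })
    }
    // Going back up any number of levels (h4 to h2, say) is fine.
    previous = level
  })
  return problems
}
```

In a browser you could collect the levels with something like Array.from(document.querySelectorAll("h1,h2,h3,h4,h5,h6"), (h) => Number(h.tagName.slice(1))) and pass the result in.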

Last I checked, the majority of web pages online failed this simple directive despite being reminded of it continually for more than two decades. So many devs are either stupid or insouciant. Craft Code is not for them.

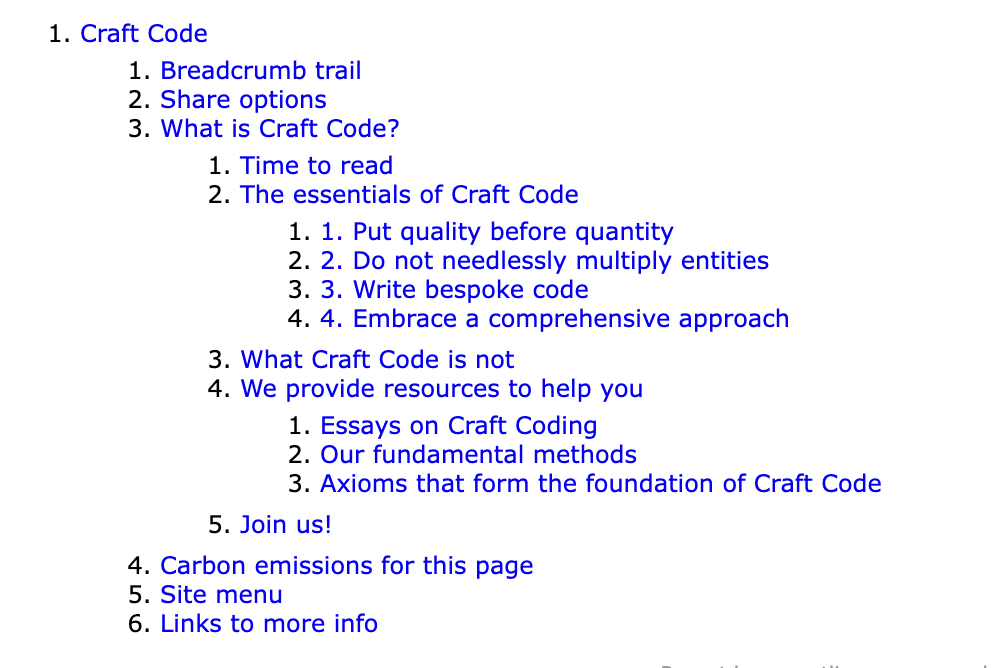

A neat toy for checking that you’ve done this well is the HTML Outliner extension. Here is the Craft-Code home page outline:

Now that’s proper nesting!

I use the <article> element with <section> elements in it. My various navigation elements all use <nav>. I use lists (typically <ul>) inside the navigation to make the separation of links clear. I never link to the current page.

My forms use <fieldset> elements freely with <legend>. Important images, code snippets, graphs, etc. are in <figure> elements with <figcaption>. Tables are used for actual tabular data, not for layout and I use the <thead>, <tbody>, and <tfoot> elements (no tables needed so far – avoid them whenever possible).

The glossary uses the description list elements – <dl>, <dt>, and <dd> – but also the <dfn> and <cite> elements. All links to the glossary use rel="glossary" and the glossary is linked to from the head of every site page.

Oh, and so much more! Every element on every page has been examined and carefully considered and researched and double-checked. Perfection, remember? Unattainable, but a man can dream.

I use a ton of metadata. Title, canonical link, charset, viewport, description, keywords, robots, author, publisher, color-scheme, theme-color (light and dark), icon (favicon), “me”, and a whole raft of structured data. And OpenGraph and Twitter Card.

<link rel="me" href="https://x.com/CraftCodeDev">

Check out the HTML of our pages by element for a full list.

Structured (linked) data

Using the schemas on schema.org, I have added a plethora of structured data to the pages. These describe the WebSite, WebPage, Article, Quotations, Images, Organizations, Persons, etc.
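To give a flavor of what this looks like, here is a minimal sketch that builds a schema.org WebPage description as JSON-LD and wraps it in a script element. The PageInfo shape, the function names, and any values you feed it are assumptions for illustration. The real pages carry far more than this.

```typescript
// Build a small schema.org WebPage object and serialize it as JSON-LD.
// PageInfo and both function names are hypothetical.
interface PageInfo {
  url: string
  name: string
  description: string
  authorName: string
}

function buildWebPageJsonLd(page: PageInfo): Record<string, unknown> {
  return {
    "@context": "https://schema.org",
    "@type": "WebPage",
    url: page.url,
    name: page.name,
    description: page.description,
    author: { "@type": "Person", name: page.authorName },
  }
}

// Wrap the data in the script element that goes in the page's <head>.
function toJsonLdScript(data: Record<string, unknown>): string {
  // Escape "<" so that a "</script>" inside a string cannot close the element early.
  const json = JSON.stringify(data).replace(/</g, "\\u003c")
  return `<script type="application/ld+json">${json}</script>`
}
```

The output can then be run through a structured data validator to see what the machines make of it.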

Schema.org provides a nice validator for structured data (there are others, such as Google’s Rich Results Test). Of course, sometimes they disagree. Hrm.

By keeping pages relatively short, I keep load times short as well, which gives me room to make the pages machine-readable. The small amount of content on each page is misleading. Each page is positively loaded with data.

Forms

There are multiple forms on the site. All of them use the built-in validations via semantic elements such as <input type="email"> or attributes such as size, maxlength, and pattern. The form’s action attribute points the submission to a Vercel (AWS) serverless function.

If the submission is a normal HTTP form submission, then the function processes the data and uses HTTP redirects (303) to redirect to error or success pages (yes, I have separate pages for each error).

But I also have a JavaScript module that every page with a form loads (pretty much every site page has a form). This script looks for <form> elements, adds the novalidate attribute, and attaches event handlers (using delegation). The handlers check validity with the element’s own checkValidity method, then parse the field’s validity state and use the field’s attributes to generate a help message.

The validities:

export const validities = [
  "badInput",
  "customError",
  "patternMismatch",
  "rangeOverflow",
  "rangeUnderflow",
  "stepMismatch",
  "tooLong",
  "tooShort",
  "typeMismatch",
  "valueMissing",
]

The help message is then displayed in the help box under the field label (or in the legend for radio button groups, for example). This help box has the aria-live attribute set so that screen readers will pick up changes to the help message.
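Here is a rough sketch of how a validity state plus a field’s attributes could be turned into a help message. The list of validity keys is the one shown above. Everything else (the FieldHints shape, the function name, the message wording) is made up for illustration and is not the site’s actual script.

```typescript
// Map the first failing validity flag to a human-readable help message.
const validityKeys = [
  "badInput",
  "customError",
  "patternMismatch",
  "rangeOverflow",
  "rangeUnderflow",
  "stepMismatch",
  "tooLong",
  "tooShort",
  "typeMismatch",
  "valueMissing",
] as const

type ValidityKey = (typeof validityKeys)[number]

// A browser ValidityState object fits this shape structurally.
type ValidityFlags = Partial<Record<ValidityKey, boolean>>

// Hypothetical summary of the attributes read from the field.
interface FieldHints {
  label: string
  min?: number
  max?: number
  step?: number
  minLength?: number
  maxLength?: number
  patternHint?: string // e.g. taken from the title attribute
  customMessage?: string
}

function helpMessage(validity: ValidityFlags, field: FieldHints): string | null {
  const failed = validityKeys.find((key) => validity[key] === true)
  switch (failed) {
    case "valueMissing":
      return `${field.label} is required.`
    case "tooShort":
      return `${field.label} must be at least ${field.minLength} characters.`
    case "tooLong":
      return `${field.label} must be at most ${field.maxLength} characters.`
    case "rangeUnderflow":
      return `${field.label} must be ${field.min} or more.`
    case "rangeOverflow":
      return `${field.label} must be ${field.max} or less.`
    case "stepMismatch":
      return `${field.label} must go up in steps of ${field.step}.`
    case "patternMismatch":
      return field.patternHint || `${field.label} is not in the expected format.`
    case "customError":
      return field.customMessage || `${field.label} is not valid.`
    case "badInput":
    case "typeMismatch":
      return `${field.label} is not a valid value.`
    default:
      return null // nothing failed, so no help is needed
  }
}
```

In the browser you would pass input.validity directly and build the FieldHints from the element’s attributes, then write the result into the aria-live help box.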

It also intercepts the submission and uses a call to fetch to call the same serverless function. (It gets the URL from the form’s action attribute – clever, no?) The function sees that the caller is an XMLHttpRequest and so responds with JSON and HTTP codes 422, 200, or 204 (or, ack, 500).
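The two response modes described above can be sketched as one small decision function. To be clear about the assumptions: detecting the scripted caller through the Accept header is my guess at one way to do it, and the /success and /error paths, the type names, and the function names are all hypothetical.

```typescript
// Decide how the form endpoint answers: redirects for plain form posts,
// JSON for scripted (fetch) submissions. Names and paths are illustrative.
interface EndpointResponse {
  status: number
  headers: Record<string, string>
  body: string
}

// Assumption: the enhanced form sends "Accept: application/json".
function wantsJson(headers: Record<string, string | undefined>): boolean {
  const accept = headers["accept"] || ""
  return accept.indexOf("application/json") !== -1
}

function respond(
  headers: Record<string, string | undefined>,
  errors: Record<string, string>,
): EndpointResponse {
  const hasErrors = Object.keys(errors).length > 0
  if (wantsJson(headers)) {
    // Scripted caller: report the outcome as JSON with a matching status code.
    return {
      status: hasErrors ? 422 : 200,
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(hasErrors ? { errors } : { ok: true }),
    }
  }
  // Plain HTTP submission: 303 See Other to a static result page.
  return {
    status: 303,
    headers: { Location: hasErrors ? "/error" : "/success" },
    body: "",
  }
}
```

The nice part of this design is that both paths run the same validation on the server, so the no-JS experience can never drift out of sync with the enhanced one.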

The validator script uses document.createElement and other DOM methods to insert error messages or replace the form with a success message.

Hence the forms work and validate even with JavaScript disabled. But with JS, they are both more helpful and more accessible. Progressive enhancement.

With JavaScript:

Without JavaScript:

Not perfect, but perfectly usable.

All JavaScript on the Craft Code site is used for progressive enhancement. Everything (e.g., the slide-out menu) should work without it, just maybe not as sweetly.

The slide-out menu is a good example. It works by CSS alone. When you click the menu trigger (hamburger), you are toggling a checkbox input. This uses CSS :has() to open the menu. JS enhances this to toggle a class on body so if your browser doesn’t yet support :has(), it still works. The only shortcoming is an older browser with no :has() support and JS disabled. But you can still tab to the menu and it opens!
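The JS half of that menu can be tiny. This is a sketch under assumptions: the class name menu-open and both function names are invented here, and the types are kept structural so the logic can be exercised without a real DOM.

```typescript
// Mirror the menu checkbox state onto a class for browsers without :has().
// "menu-open" and the function names are hypothetical.
interface ClassListLike {
  toggle(token: string, force?: boolean): boolean
}

// Returns true when the fallback class is needed.
// In a browser, pass (condition) => CSS.supports(condition).
function needsHasFallback(supports: (condition: string) => boolean): boolean {
  return !supports("selector(:has(*))")
}

// Add the class when the checkbox is checked, remove it otherwise.
function syncMenuClass(
  checked: boolean,
  bodyClassList: ClassListLike,
  className: string = "menu-open",
): boolean {
  return bodyClassList.toggle(className, checked)
}
```

In the page, this would be called from a change listener on the checkbox with document.body.classList, and the CSS would open the menu for either the :has() selector or the body class.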

Is it perfect? Oh, hell no. Compromises everywhere. Robbing Peter to pay Paul. For every three needs, pick two. That sort of thing. And always guessing, guessing.

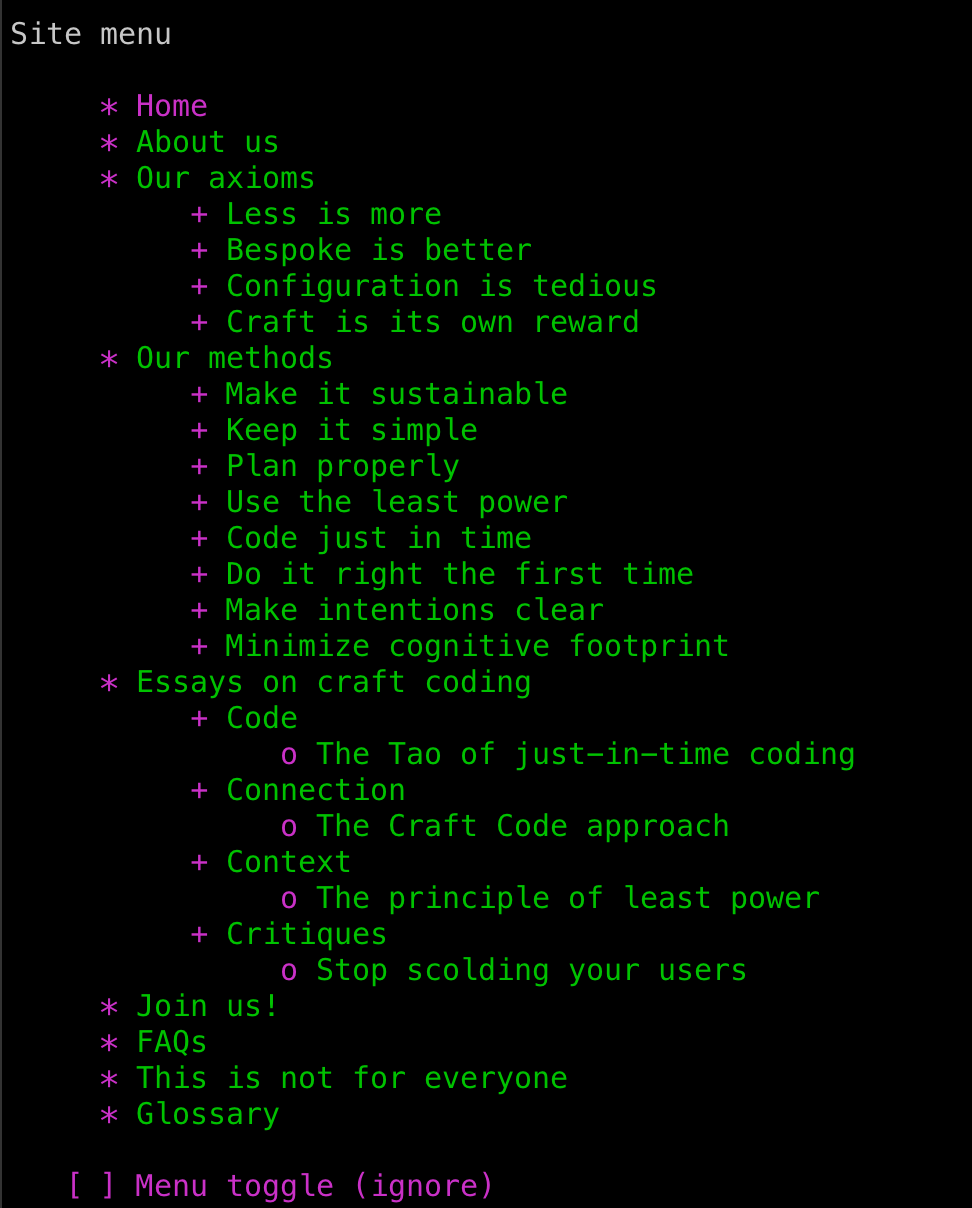

With both JS and CSS disabled (think text-only browser like Lynx), the menu appears at the bottom of the page:

You can see the menu toggle checkbox (which does nothing on Lynx) at the bottom.

It’s responsive

I’m not a fan of “mobile-first” design. This is a way to force lazy coders to consider what their desktop sites look like on mobile. That’s the good.

But we shouldn’t need a “mobile-first” approach to get us to consider a wide range of screen resolutions, sizes, and aspect ratios. It’s not binary, dammit! But devs love 1/0 approaches. I prefer to take that mathematically: 1/0 is infinity.

I’m still perfecting things on Craft Code (my to do list is huge), but it must work on a phone. Tablets, too.

The truth, however, is that HTML is responsive by default. Let me repeat that:

HTML is responsive by default.

What we as coders do, mostly, is build a perfectly-responsive HTML page/component, and then immediately use CSS to ruin the responsiveness because we’re only checking/prioritizing for a particular screen.

So here is the Craft Code approach:

Always begin with pure HTML. Use semantic HTML. Structure the page properly with landmarks and headings. Check it with accessibility tools. Notice how it works just fine on every browser, even Lynx, as primitive a browser as you can find. Build the whole damn site/page/component in HTML alone first.

Think hard about landmarks! Use <header>, <main>, and <footer> to divide up the page. Use <nav>, <aside>, and <section> where they make sense. Nest headings <h1> to <h6> properly (you will never really need to go further than <h4> and rarely that far).

Use ordered lists (<ol>) for lists where the order is important, and unordered lists (<ul>) otherwise. For lists where each term is followed by a description or definition, use a description list (<dl>) and possibly the <dfn> element. See the Craft Code glossary pages.

Use tables for tabular data, not for layout. Tabular data generally has column or row headers, so use the right elements and attributes. But try to avoid tables as much as possible (unless very small). They are difficult to grasp and should be a last resort. YAGNI.

Use figures (<figure>) with captions (<figcaption>) to wrap graphs, charts, images, code snippets, etc.

Design forms and controls very carefully. There is a great deal of information available on how to design forms properly, including accessibility and usability (UX) concerns. Group controls with <fieldset> and use the <legend> element. Use <label> properly and avoid the placeholder attribute. Do your homework.

Use the details element (<details>) with <summary> for digressions and information that can usually be left hidden. With a touch of JavaScript enhancement, these can make good accordion components. We use them on the FAQ page (open all/close all JS enhancement coming soon).

Use the picture element (<picture>) with <source> elements to provide image options with different codecs, sizes, resolutions, etc. You can use media queries! (See above code.) Or use the srcset attribute on <img>. Make it as performant as possible. Tools such as Lighthouse and image optimizers (or services) help.

Use the rel attribute (e.g., rel="external", rel="glossary") on links (<a> and <link>), which makes adding CSS easier as well as helping user agents to understand the links.

Spend a good amount of time on metadata in the <head> element. Consider all the potential <meta> and <link> elements (charset, viewport, etc.). Add JSON-LD if possible (see schema.org).

Note that you have, if you inserted your content and maybe a few progressive-enhancement scripts for, say, form elements, 90% of your website done and it works on all browsers! No exceptions. Now it’s time for CSS.

Use the most basic CSS first. Check that things work on any and all viewport sizes and both in portrait and landscape orientations. Set your fonts (we use Modern Font Stacks for speed).

If possible, test on older browsers. Use caniuse.com to get an idea when various CSS features were implemented in different browsers. Gives you a good idea how many browsers are out there. And many are missing, such as Arc, Brave, Epic, Mullvad, Responsively, Tor, or Vivaldi, to name a few.

Progressively enhance your CSS using newer features, but wrap them in @supports() to avoid messing up the CSS for earlier browsers. You can comment them out temporarily to see the difference.

You can use BEM or equivalent if you like. Personally, I like classes. I put classes based on the name of the component on each key element. I don’t need to put long class names. If I need to reach into a component I just use the parent’s class as well: .subscribe-form .email-input. If you need to override one of these it is easy to increase the specificity by, for example, including a tag name or two: form.subscribe-form input.email-input. In extreme cases, use an ID. Despite what many say, specificity is your friend.

Focus very hard on not destroying responsiveness! Test, test, test.

CSS Properties are great for theming. Always provide fallbacks (call it your default style).
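On the specificity point above, here is a small sketch that counts specificity for simple selectors so you can see why adding a tag name or two wins. It is deliberately limited: it understands only ID, class, and type selectors joined by combinators, and ignores attribute selectors, pseudo-classes, and pseudo-elements. The names are hypothetical.

```typescript
// Count (ids, classes, types) for selectors built only from #id, .class,
// and type names. Not a full CSS parser: no attributes or pseudo-classes.
interface Specificity {
  ids: number
  classes: number
  types: number
}

function countMatches(text: string, pattern: RegExp): number {
  const found = text.match(pattern)
  return found ? found.length : 0
}

function specificity(selector: string): Specificity {
  return {
    ids: countMatches(selector, /#[\w-]+/g),
    classes: countMatches(selector, /\.[\w-]+/g),
    // A type name starts a compound selector: at the start or after a combinator.
    types: countMatches(selector, /(^|[\s>+~])[a-zA-Z][\w-]*/g),
  }
}

// Positive when a beats b, negative when b beats a, 0 on a tie.
function compareSpecificity(a: Specificity, b: Specificity): number {
  return a.ids - b.ids || a.classes - b.classes || a.types - b.types
}
```

With this, .subscribe-form .email-input counts as 0 IDs, 2 classes, 0 types, while form.subscribe-form input.email-input counts as 0 IDs, 2 classes, 2 types, so the second one wins. Any selector with an ID beats both.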

Andy Bell has an interesting approach, though he seems to have disappeared recently. We appear to be edging apart. I also like Every Layout. And I’ve learned some good approaches from Kevin Powell. Sigh … so much refactoring to do.

Finally, add your JavaScript. I use TypeScript and Astro to get bundling, a cheap component architecture, and TS transpiling, just as I use Vercel to get easy serverless functions. By this point the site is 99% done and you are just adding progressive enhancement. Unless you’re building some kind of complex app such as a spreadsheet, that is. Or a game. But more on that later.

Use the Web APIs! Doing the work natively in the user agent is much more performant than downloading a huge JS library to do what the browser already knows how to do.

For forms, you have the validity properties and the FormData object, both of which make forms really easy. Why reinvent the wheel?

For modifying the DOM (creating elements, adding attributes, inserting, removing, and updating them), native methods such as createElement are much faster. If you find them onerous, write wrapper functions! You’re a programmer, are you not?

I have been experimenting with a very simple pubsub system using browser events (including custom ones) and event delegation. There are some exceptions, but you can catch almost everything at the document level and use the event object to figure out how to respond. Coming soon?
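To make the delegation idea concrete, here is a minimal sketch of a selector-based event router. It is not the pubsub system mentioned above (that is still an experiment), and every name in it is hypothetical. The only DOM feature it relies on is the closest method, typed structurally so the routing logic stands on its own.

```typescript
// Route events caught at the document level to handlers by CSS selector.
// A sketch only: createBus, subscribe, and dispatch are invented names.
interface TargetLike {
  closest(selector: string): object | null // same contract as Element.closest
}

type Handler = (matched: object, eventType: string) => void

interface Route {
  selector: string
  handler: Handler
}

function createBus() {
  const routes: Record<string, Route[]> = {}

  // Register a handler; the returned function removes it again.
  function subscribe(eventType: string, selector: string, handler: Handler): () => void {
    const route: Route = { selector, handler }
    routes[eventType] = (routes[eventType] || []).concat(route)
    return () => {
      routes[eventType] = (routes[eventType] || []).filter((r) => r !== route)
    }
  }

  // Call every handler whose selector matches the target or one of its ancestors.
  function dispatch(eventType: string, target: TargetLike): number {
    let handled = 0
    for (const route of routes[eventType] || []) {
      const matched = target.closest(route.selector)
      if (matched !== null) {
        route.handler(matched, eventType)
        handled += 1
      }
    }
    return handled
  }

  return { subscribe, dispatch }
}
```

In a browser the wiring would be a single listener per event type on document, which checks that event.target is an Element and passes it to dispatch. Events that do not bubble (focus and blur, for example) are among the exceptions and need capture or their bubbling cousins.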

Make sure that everything works without JavaScript, even if that means trips to the server when JS is disabled.

Check, check, check for accessibility concerns! Test on older browsers and even Lynx. Make sure your JS “enhancement” doesn’t break things.

Keep your JS to a minimum, both for performance reasons and for sustainability. Remember, YAGNI: you aren’t gonna need it. Keep it lean and mean and you won’t regret it.

I am pretty good with semantic HTML, but I’ve been doing it for decades. That said, I learn something new almost every day.

Years of working on assembly-line, commodity code in enterprise with React and MUI and similar libraries nearly destroyed my knowledge of CSS, which was never that great. In building Craft Code I have had to learn or re-learn an enormous amount. And I’m still embarrassingly weak.

So there is lots left to do on the CSS side, but if you wait for perfection before you ship, you will never ship. I’ve made that mistake too many times. So here it is, warts and all:

My grasp of functional JavaScript is reasonably good. I might even be able to write a monad tutorial (but I won’t). I gave up on OOP a long time ago (fifteen years now!). I just can’t see a situation in which it is better than FP, and usually a lot worse. Of course, with JS I am regularly forced to deal with some OO, but I remember enough (SOLID and all that, design patterns, etc.) to get by.

Ha, ha. My grasp of TypeScript types is a lot less impressive. I prefer types to interfaces because, hello, the latter are mutable. Not very FP. So far I’ve gotten by mostly without interfaces, but then I’m not doing OOP.

But I still have a lot of red squiggly lines in my TS files and I’ll be damned if I can figure out what the hell is wrong. And huge “error” messages filled with ellipses don’t help.

(I figured out how to force the ellipses to render the hidden content. Sadly, that didn’t help much. If it tells me what I’m missing then it’s an easy fix. But if it doesn’t, I’m often helpless. It’s trial and error time. I do way, way too much casting. I never had this kind of problem with Scala, so maybe it’s a TypeScript thing.)

So for the Craft Code site, here is my self-completed report card so far:

HTML: A or A+

CSS: B-

JS: B+

TS: C

Accessibility: A

Performance: A

Sustainability: B

Sorry, Dad! Please don’t ground me. This is a lot to learn! I’m trying.

Still, here’s the home page in Lynx:

Not bad, eh? That seems pretty workable. Pages load in under a second. They are navigable by keyboard. There are skip links (multiple). Plenty of ARIA labels and describedBys. I’m pretty happy with it so far.

And that’s what I’ve been doing for six months or more. Updates as I move forward, and more Cantankering coming soon now that I have a breather.

Whew!